Related articles

Using EditPlus to connect to a Linux system and work with files remotely

EditPlus is a powerful text editor.

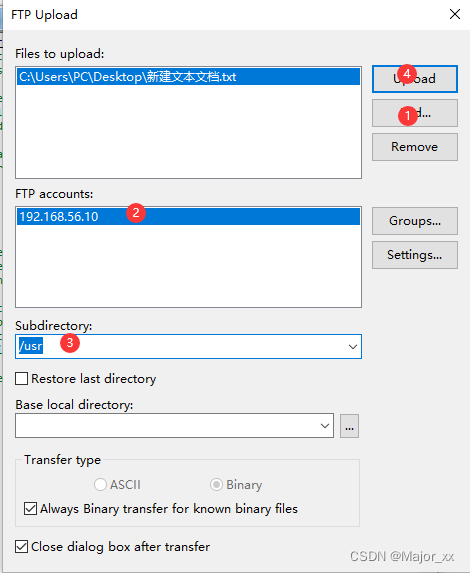

1. File -> FTP -> FTP Settings;

2. Add -> Description -> FTP server -> Username -> Password -> Subdirectory -> Advanced Options. Note: the Subdirectory set here is what later file uploads use as the default…

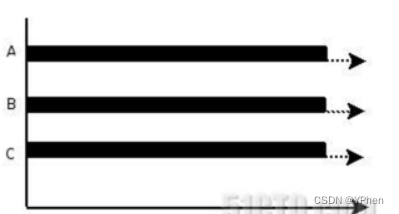

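Learning Go: Multithreading (goroutine)

Go series

1. Learning Go: Hello World 2. Learning Go: Basic Syntax 3. Learning Go: Slice Operations 4. Learning Go: Map Operations 5. Learning Go: Struct Operations 6. Learning Go: Channels 7. Learning Go: Multithreading (goroutine)

Contents: Go series; Preface; I. Introduction to concurrency; 1.1 Processes, threads, and coroutines; 1.2 Concurrency and parallelism; II. Introduction to goroutines; III…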

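Day10 Homework (SpringBootWeb case study)

Assignment 1: complete the feature that was left in class for you to implement yourself: the edit feature of department management.

Note:

The edit feature of department management requires two endpoints. First develop the endpoint that looks up department information by ID; it is used to query the data and display it. (Be sure to do this one first.) Then develop the … by ID…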

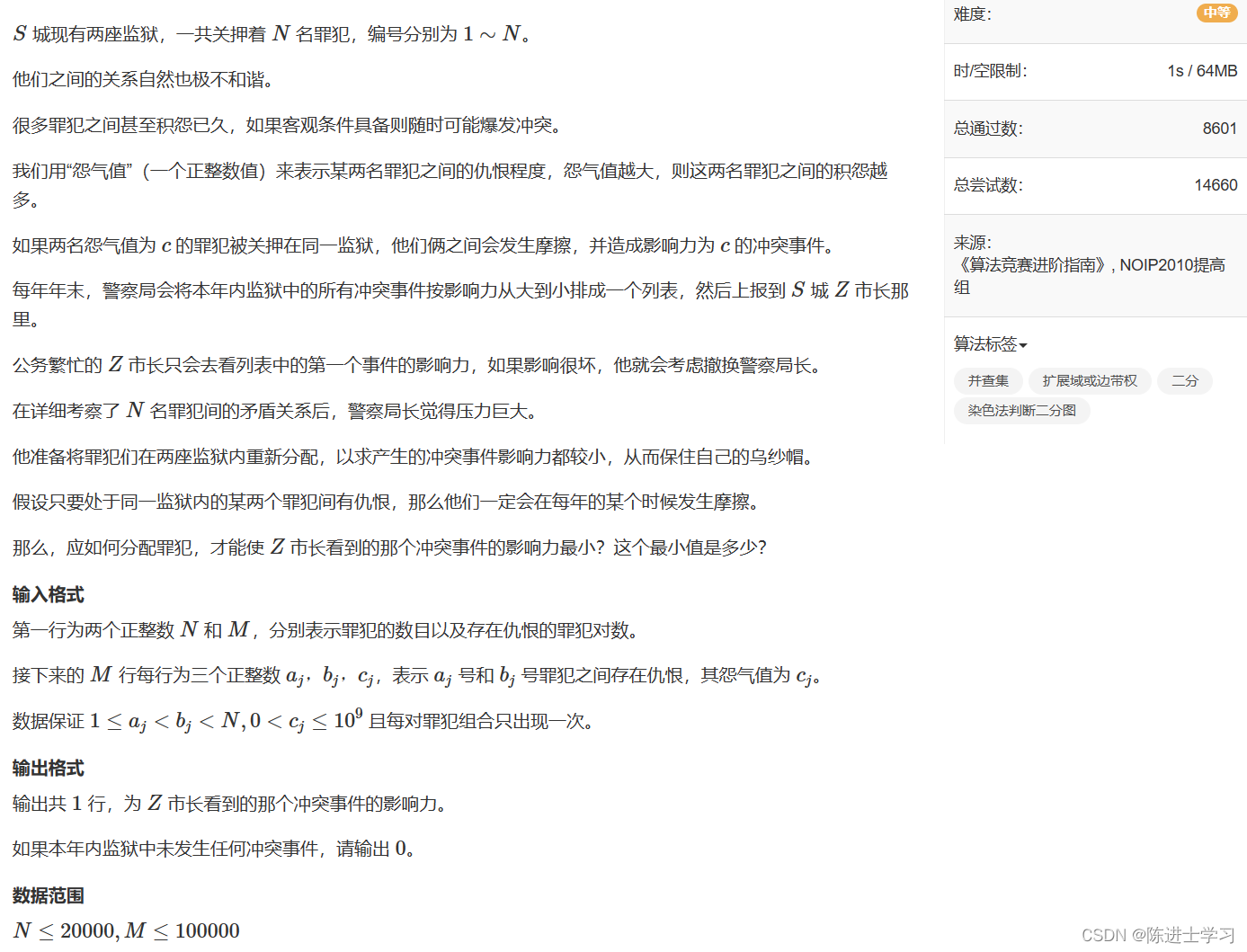

AcWing 257. Imprisoning Criminals (bipartite graph + coloring)

Sample input:

4 6

1 4 2534

2 3 3512

1 2 28351

1 3 6618

2 4 1805

3 4 12884

Sample output:

3512

Analysis: binary search on the answer, checking each time whether the graph is bipartite.

#include<bits/stdc.h>

using namespace std;

typedef long long ll;

const int N=2e4+5,M=2e5+5…

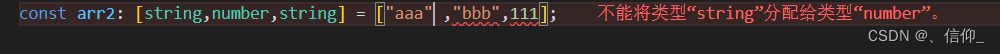

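The difference between tuples and arrays in TypeScript

Tuples suit cases where the length and types are clearly fixed, such as a latitude/longitude pair.

// Tuples are similar to arrays, but their type annotations differ. // The types, positions, and number of values assigned to a tuple must exactly match the types, positions, and number in its definition; otherwise an error is raised. // Array: the value at a given position can be any of the annotation's…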

Ariadne’s Thread: Using Text Prompts to Improve Segmentation of Infected Areas from Chest X-ray Images

Paper: https://arxiv.org/abs/2307.03942, MICCAI 2023

Code: GitHub - Junelin2333/LanGuideMedSeg-MICCAI2023: Pytorch code of MICCAI 2023 Paper-Ariadne’s Thread : Using Text Prompts to Improve Segmentation of Infected Areas fro…

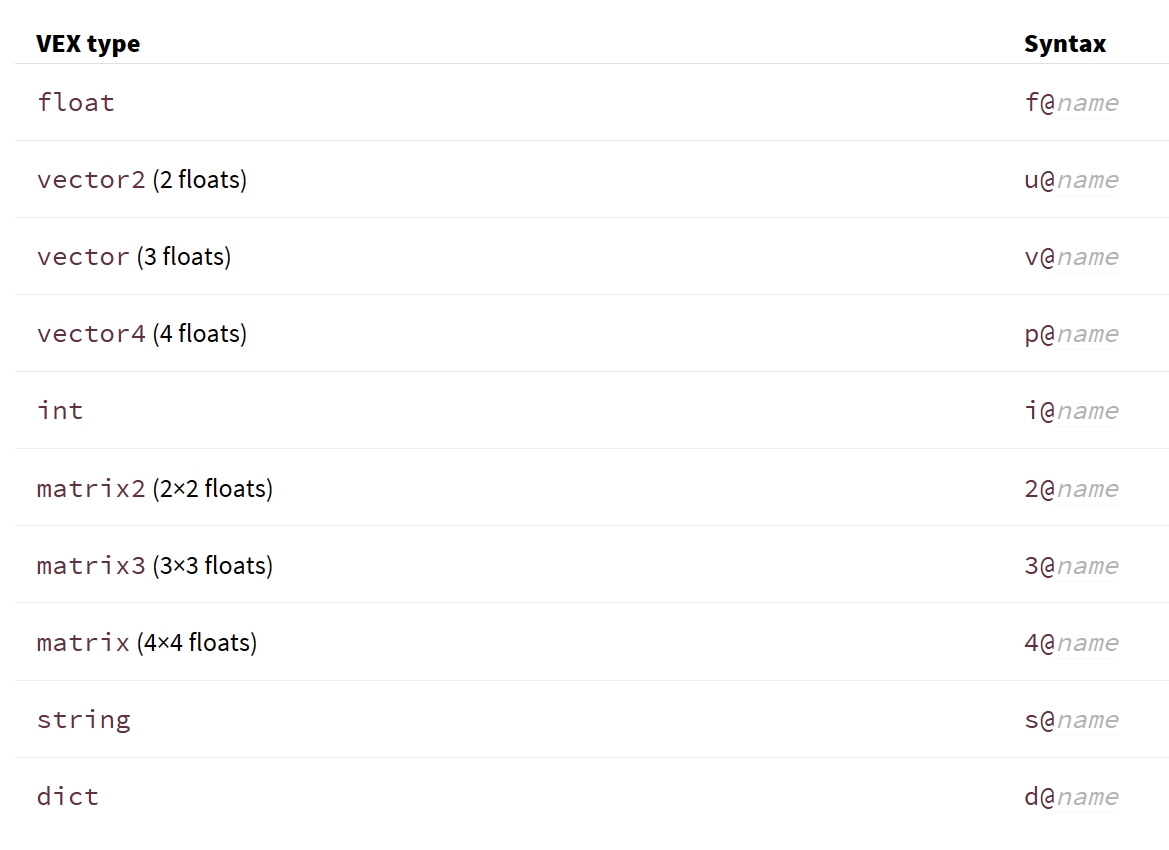

Attribute types in Houdini VEX

https://www.sidefx.com/docs/houdini/vex/snippets.html#parameters

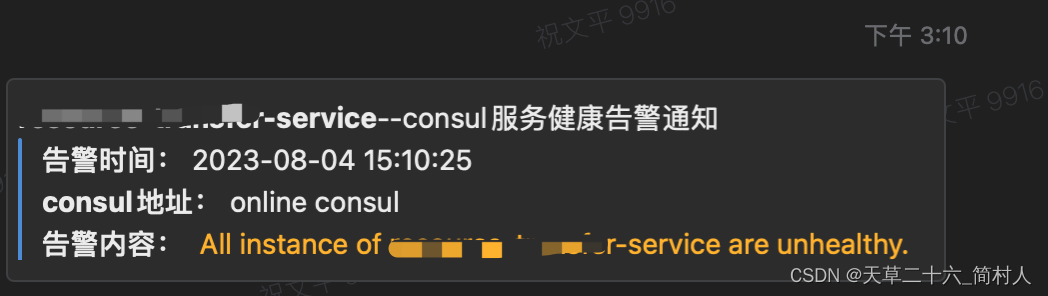

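Service health monitoring and alerting for the Consul service registry

I. Background

Consul can serve both as a service registry and as a distributed configuration center. When it acts as a service registry, Java microservices that call one another periodically query the list of instances of the target service whose status is healthy and available.

If the called service is found to have its Consul-registered…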

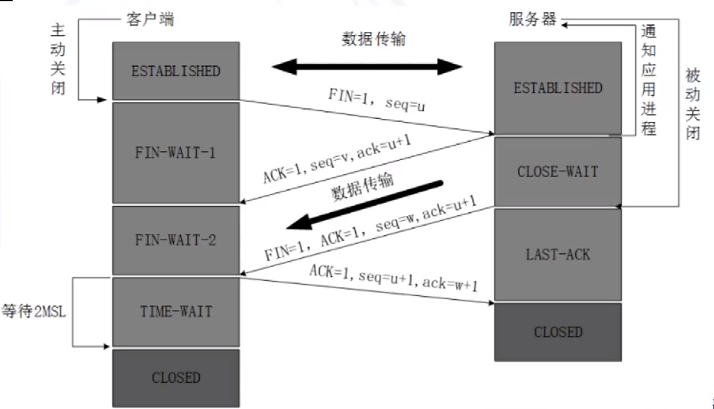

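Understanding the TCP Three-Way Handshake in Depth: Connection Reliability and Security Risks

Contents

Introduction; A review of TCP and how it works; The purpose and steps of the TCP three-way handshake; Problems and security risks that can arise during the three-way handshake; Why is the three-way handshake necessary? Could the number of handshake steps be increased or reduced?; Similarities and differences between TCP's four-way teardown and the three-way handshake

Introduction: In network communication, TCP (Tra…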

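Embedded Systems for Beginners: C51

I. Preparation

1. Hardware 2. Software II. Background knowledge

1. What is a microcontroller?

A microprocessor, memory, and I/O interface circuits are integrated on a single integrated-circuit chip, forming a single-chip microcomputer, that is, a microcontroller. The STC89C52 microcontroller: STC: the company; 89: belongs to…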

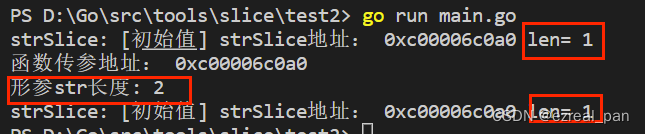

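Go function arguments: understanding pass-by-value

After five years of Go development I have not grown much; I have stayed at the business-logic level. I had always assumed that when a reference type is passed to a function in Go, it is passed by reference, and I can hardly be blamed for that misunderstanding. Let's look at an example. As everyone knows…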

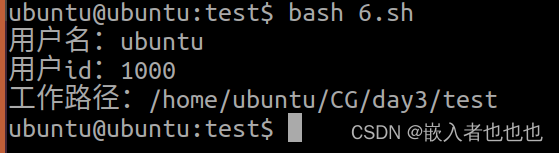

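DAY3, Advanced C (variables, arrays, arithmetic, and branching in the shell)

1. Put together a mind map; 2. Count the regular files and the directories in the home directory;

#!/bin/bash
arr1=($(ls -la ~/ | cut -d r -f 1 | grep -w -))
arr2=($(ls -la ~/ | cut -d r -f 1 | grep -w d))
echo "Number of regular files: ${#arr1[*]}"
e…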

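Prerequisites for API testing

URL structure (a key interview topic)

A URL is the address that the browser requests.

A URL has six parts:

1 Protocol: http is the Hypertext Transfer Protocol; in https, the s stands for SSL encryption, which makes transmission more secure.

2 Domain name: a name that stands for an IP address. From…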

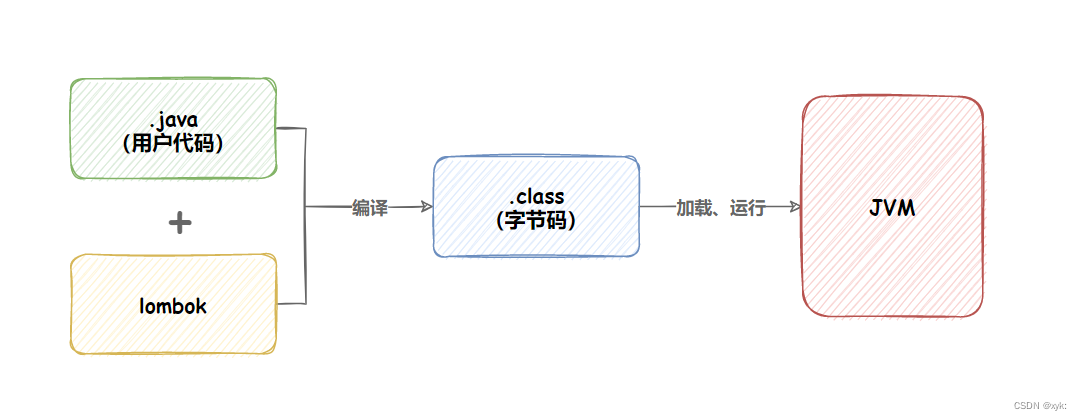

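[SpringBoot] Logs + Lombok-based log output

About the author: an engineer aiming for a big tech company. Author homepage: xyk. Column: JavaEE Advanced. In everyday development, logs are an important part of a program. Imagine that the program throws an error and you are not allowed to open the console to look at the logs: could you still find the cause of the error…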

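uniApp plugin Fvv-UniSerialPort: a usage example

Following the previous post, "Connecting uniApp to an Android tablet card reader and reading serial-port data", this post describes in detail how to use the plugin to read serial-port data.

Principle

A uniApp plugin reads the device's serial-port data, which is parsed and then handed to the business logic;

Steps

Create a uniApp project; add the plugin Android serial-port communication Fvv-UniSerialPort: Android serial-port communication Fvv-UniSerialPort - DCloud plugin…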

Stable Diffusion tutorial

Environment: stable diffusion version: v1.5.1; python: 3.10.6; torch: 2.0.1+cu118; xformers: N/A; gradio: 3.32.0. 1. Download the webui

Download link: GitHub stable-diffusion-webui download

Download according to your own setup. An NVIDIA GPU is best: (my…

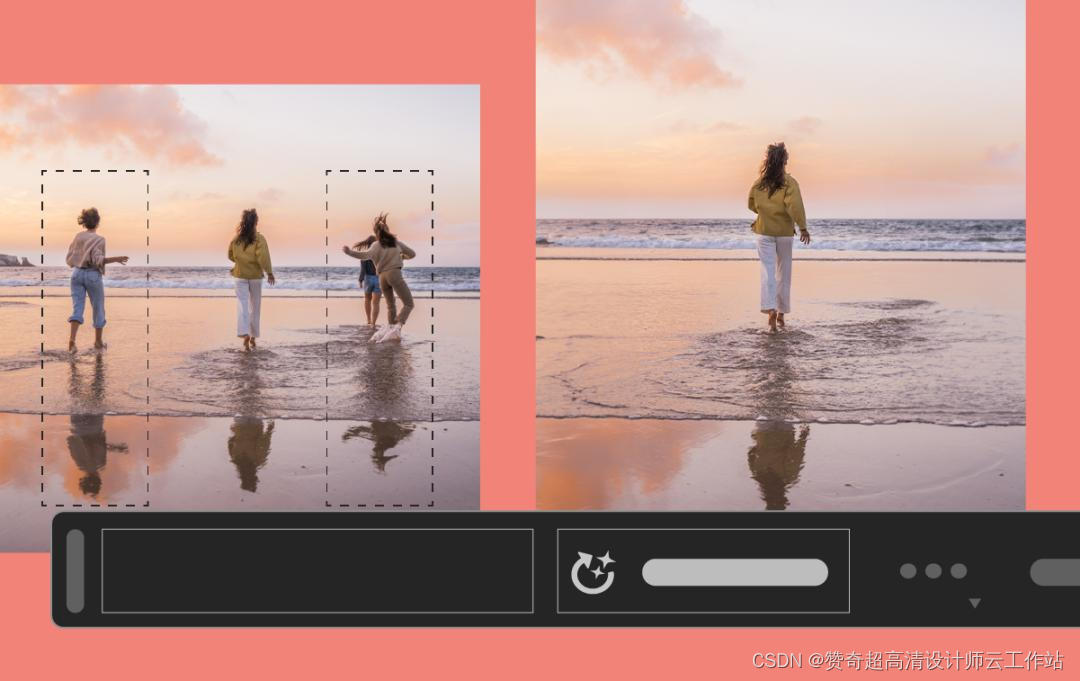

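How mind-blowing is Photoshop plus AI? Does having it instantly make you an expert?

The hottest keyword of 2023 is probably AI.

Since ChatGPT showed better-than-expected capabilities early in the year, AI has once again become the focus of public attention. Tech giants at home and abroad have joined this AI-driven industry transformation, developing and releasing a large number of AI tools one after another.

Adobe, the elder statesman of the design industry, has not fallen behind either,…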

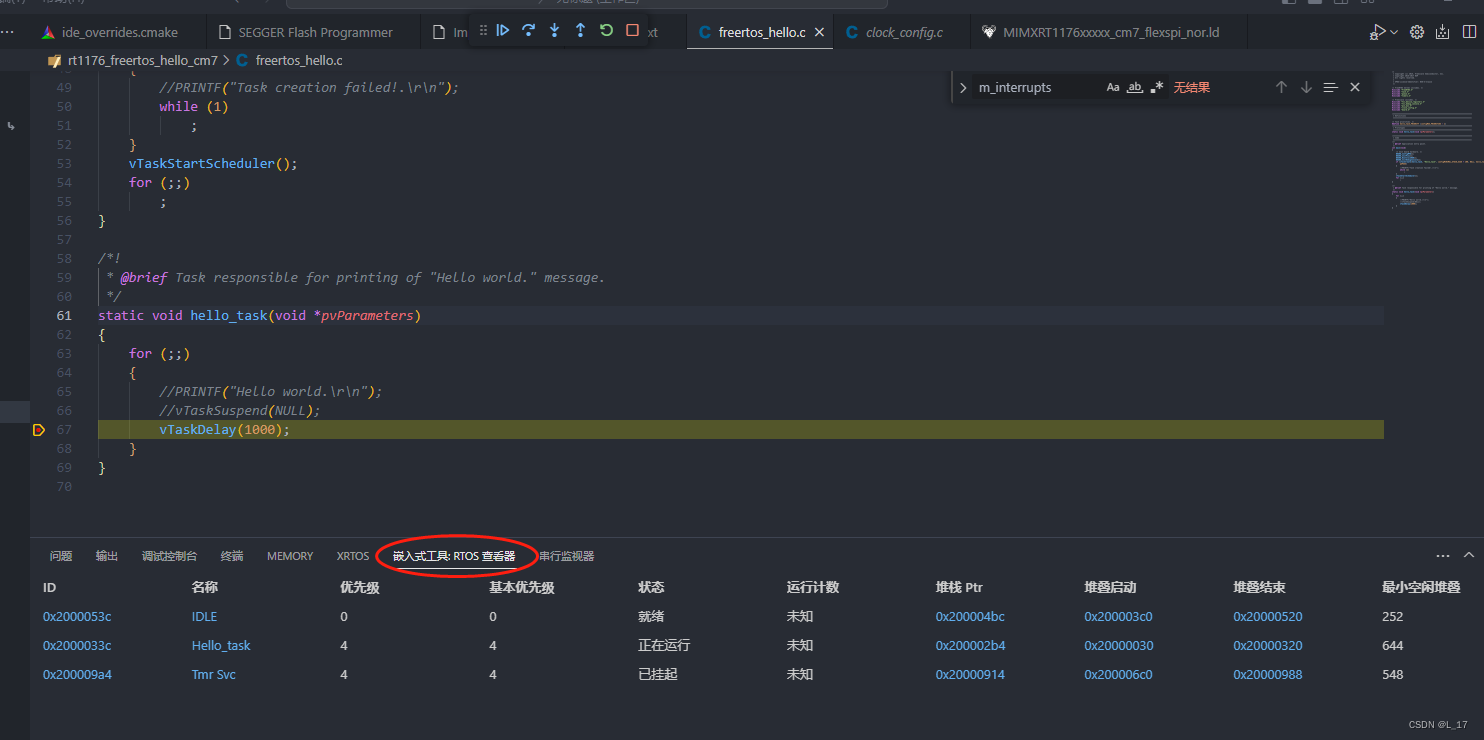

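MCUXpresso for VS Code -- developing for the RT1176 in VS Code

MCUXpresso for VS Code is an extension released by NXP. With it, NXP's MCX, LPC, Kinetis, and i.MX RT MCUs can all be targeted for embedded development on the VS Code platform. The functional block diagram is as follows: Preparation:

Software environment:

Windows (it should work across platforms, but Linux and macOS were not tested)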

VS Code

ninja

CMa…

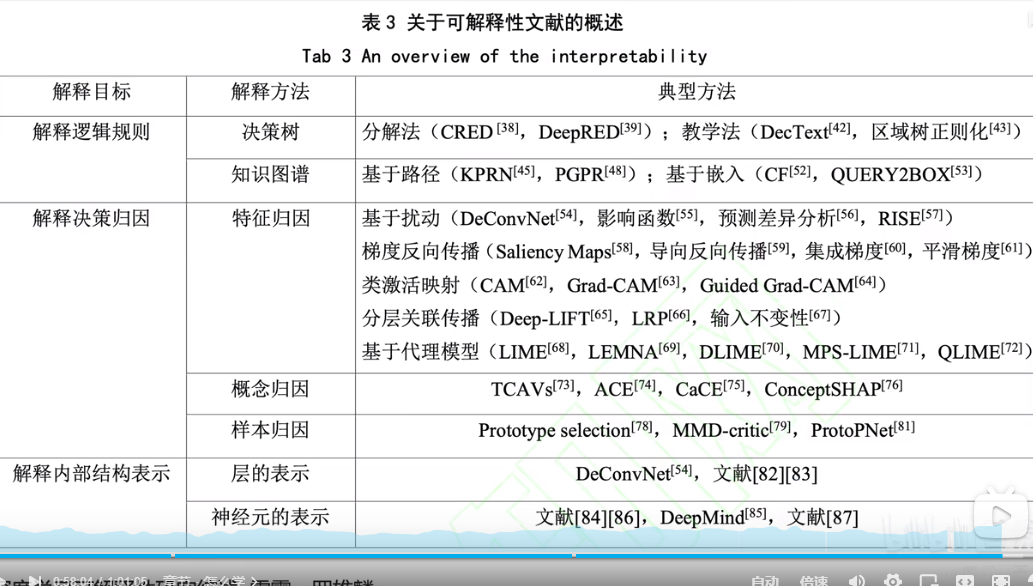

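An Introduction to Explainability Analysis in Artificial Intelligence (first draft)

Contents

Mind map 1. Problems caused by the black box 2. Why explainability analysis is needed, from the application side

2.1 What explainability analysis means

2.2 Explainability analysis combined with AI application examples

2.3 The thought process behind explainability analysis (using visualization as an example) 3. How to study explainability analysis

3.1 Use models that are easy to explain 3.2 Traditional machine learn…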

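Natural Language Processing: Chapter 6, Transformer - the cornerstone of modern large models

Theoretical foundations

The Transformer (from Attention Is All You Need (arxiv.org), published by Google in 2017) follows on from the previous post on attention. It is an architecture derived from self-attention, and it mainly addresses two problems of seq2seq:

It takes into account the source sequence and the target…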